Finally, after many months of wanting to do it, I made it: I moved my Umami instance from a VPS with Docker on DigitalOcean to a pod on PikaPods.

Introducing

Umami is a great privacy-respecting analytics service that can be self-hosted, lightweight, and detailed enough for me.

PikaPods, on the other hand, is a great service that allows you to create pods (like Docker containers) with many open-source applications, at a very low price and with SFTP or database access (depending on the software used).

Note: I know nothing about databases like MySQL and PostgreSQL, and the little I do know I learned by making this transition. But if you simply need to convert a Umami DB, this post can help you.

Ok, but why?

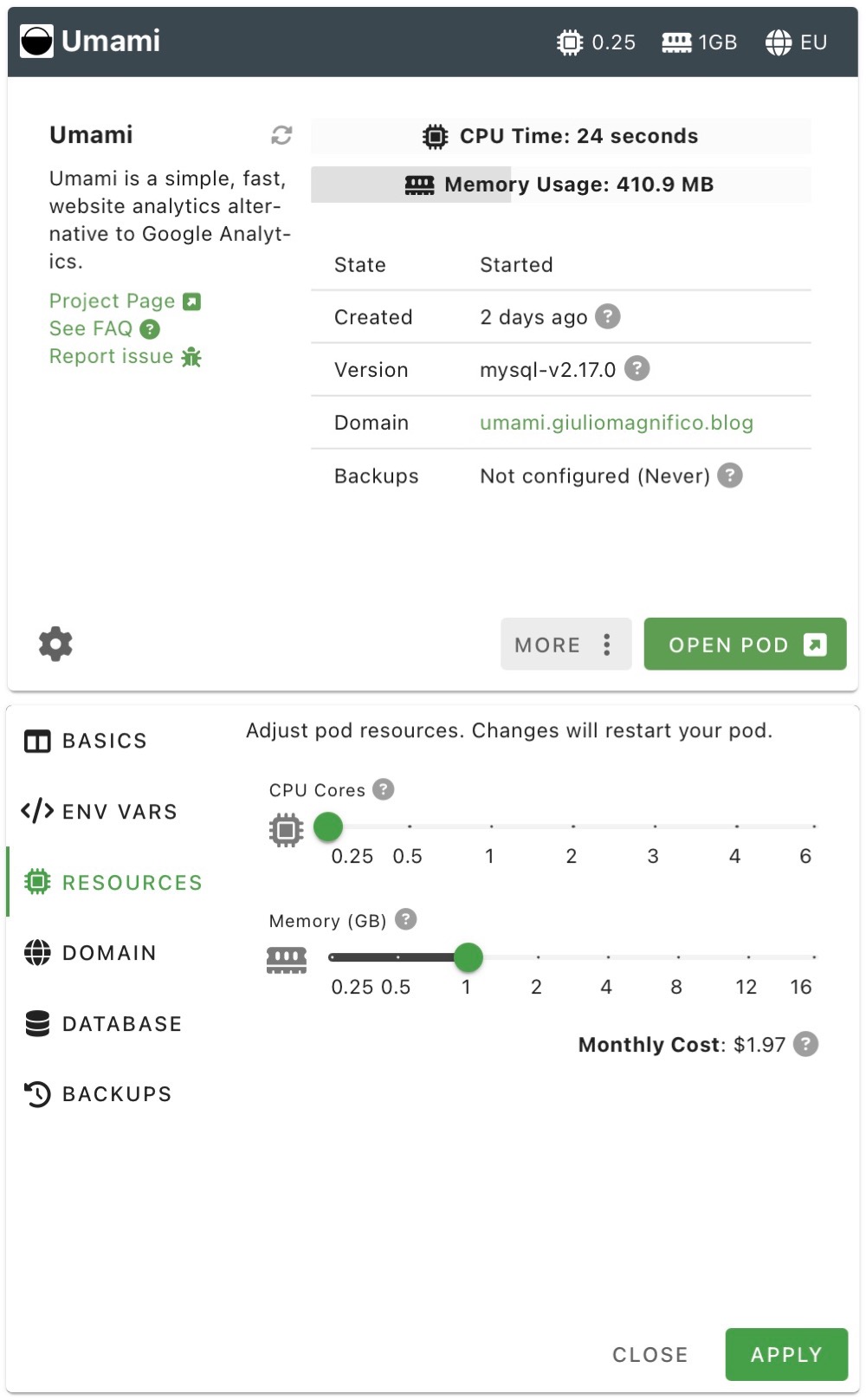

Well, basically because the VPS costs $7.20 per month, and the pods on PikaPods cost less than $2 ($1,97 or even less -$1,48- with the default 512MB of RAM, I added another 512MB to the pod). So there’s a nice saving (about €60/70 less annually), and also because the pod on PikaPods is much easier to manage. It updates itself, I don’t need to monitor the storage, I don’t have to worry about security, or something breaking after an update (which has happened before), and overall it’s easier to maintain.

My problem was that I had created the DigitalOcean Umami instance with a PostgreSQL database, while on PikaPods there’s only the option to use MySQL. So I would have had to start from scratch and lose 3–4 years’ worth of data/statistics from this blog, and I was not happy about that.

So my idea was to import the old DB into the new one on PikaPods, which is very easy to do—unless the DBs are different, like PostgreSQL and MySQL. If they are different, you need to make all the tables compatible, and it’s a huge work.

I tried to do it with various automated scripts, but in the end, none of them worked well, and I kept spending a lot of time testing (the tables didn’t have the right names and formats, so the import failed).

But now there’s ChatGPT …and everything is simpler, especially for these long and repetitive tasks. In fact, without ChatGPT, I would never have managed to do it.

Of course, ChatGPT made “a thousand” different mistakes. For example, sometimes I asked it to change all the site IDs in the SQL file, it took 3–4 minutes, gave me the file, I opened it, and it was exactly the same as before—or with an ID it had invented instead of the one I told it to use. Other times the files were completely empty, with only something like a comment saying “start database” and nothing else in the entire file.

So it wasn’t easy, but with a bit of patience and by guiding ChatGPT with the right prompts, I did it.

The steps

The data from the sites to be migrated were from two sources: the blog (giuliomagnifico.blog) and the portfolio (giuliomagnifico.it).

I accessed the database on DigitalOcean using:

docker exec -it umami_db_1 psql -U umami -d umami

Then I exported the DB sessions and events in CSV format (the following steps are only for the blog ID):

\COPY (

SELECT * FROM website_event

WHERE website_id = 'f6032ede-9ea2-405a-9a0e-c7fec2b43bb1' -- giuliomagnifico.blog

) TO '/website_event_blog.csv' WITH CSV HEADER;

\COPY (

SELECT * FROM session

WHERE website_id = 'f6032ede-9ea2-405a-9a0e-c7fec2b43bb1'

) TO '/session_blog.csv' WITH CSV HEADER;

After exiting the DB, I positioned myself in /home/giuliomagnifico and copied the .csv generated file to outside the container:

docker cp umami_db_1:/website_event_blog.csv .

docker cp umami_db_1:/session_blog.csv .

Then I made many attempts to convert small parts of the file into the correct format for Umami, and this is where I wouldn’t have been able to do anything without ChatGPT.

At first, the events were all distributed on a single day, then over five days, then the data about devices and locations wasn’t showing up… in short, it was a big mess that I managed to resolve through many attemps and errors.

In the end, I found the correct format for the tables and data, but the .sql file was 112 MB (for 3/4 years of data), so I (or rather, ChatGPT) split it into files with 10,000 queries each.

After many attempts, the final compatible query has been created as follows:

– Timestamp format conversion

-- PostgreSQL format:

'2023-02-09 20:52:35.328+00'

-- Converted for MySQL:

'2023-02-09 20:52:35.328'

– NULL and string sanitization

- Converted all "" and blank fields to NULL (without quotes)

- Properly escaped special characters in strings (e.g. ’ → ')

– Safe inserts with INSERT IGNORE

To avoid errors caused by duplicate PRIMARY KEY values (e.g., event_id, session_id):

INSERT IGNORE INTO website_event VALUES (...)

– Website ID correction

f6032ede-9ea2-405a-9a0e-c7fec2b43bb1 ← for giuliomagnifico.blog

– Session table conversion

Cleaned and converted:

- Fixed timestamps

- Escaped string values

- Converted blanks to NULL

- Ensured valid UUIDs

- Used: INSERT IGNORE INTO session VALUES (…)

– File splitting

- Split the final .sql dump into multiple files, each with 10,000 lines.

- Each file contains independent INSERT statements, one per line.

– Final example

Original PostgreSQL:

9d358964-3fa0-5dbe-9f3f-0584d6ace465,71aa5b5e-b113-4781-9e95-38fff4bcfb53,giuliomagnifico.blog,safari,Mac OS,desktop,3008x1692,en-US,US,,,,2023-02-09 20:52:35.328+00

coverted into MySQL-compatibles

INSERT IGNORE INTO session VALUES (

'9d358964-3fa0-5dbe-9f3f-0584d6ace465',

'f6032ede-9ea2-405a-9a0e-c7fec2b43bb1',

'giuliomagnifico.blog',

'safari',

'Mac OS',

'desktop',

'3008x1692',

'en-US',

'US',

NULL, NULL, NULL,

'2023-02-09 20:52:35.328'

);

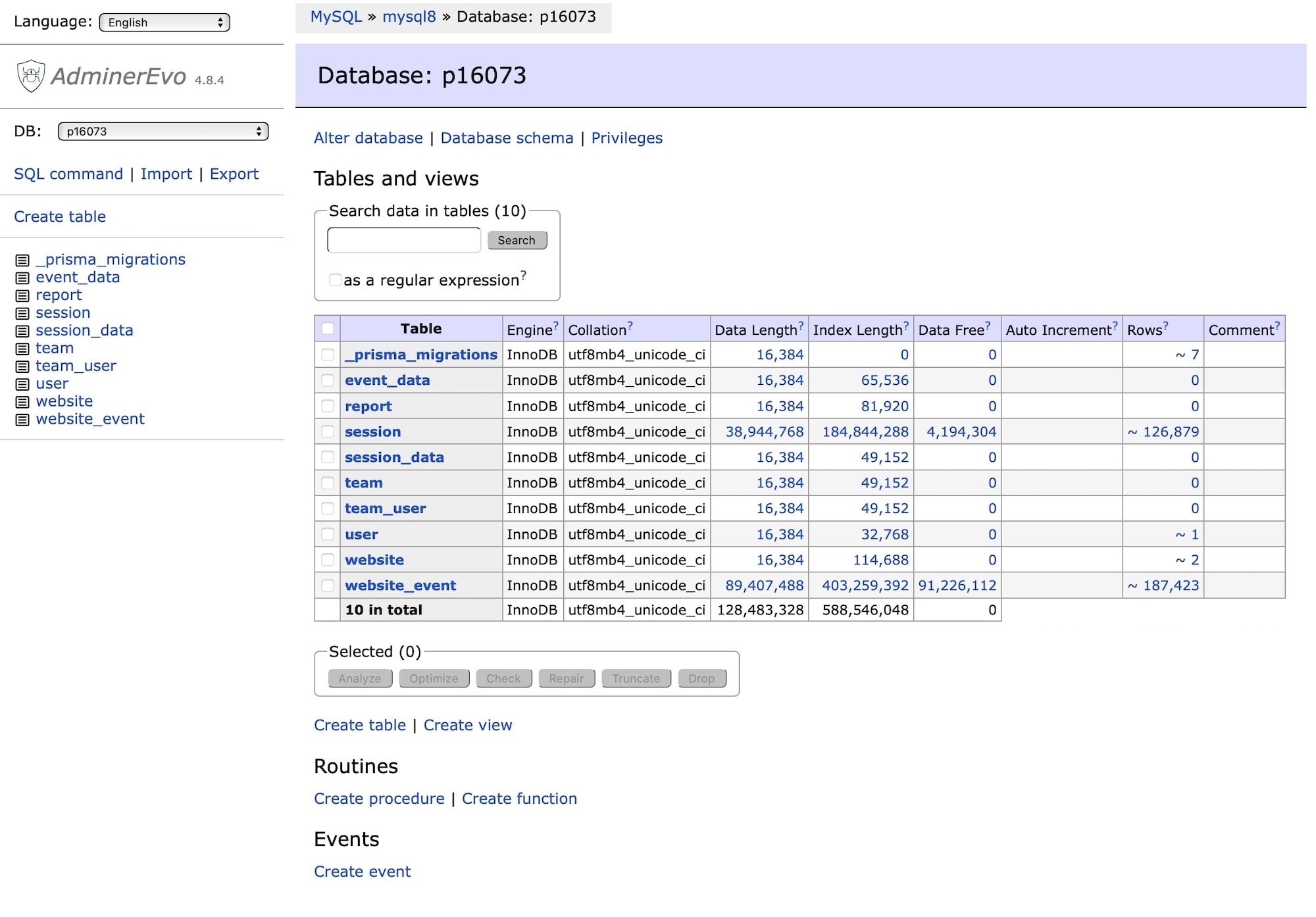

Then I accessed the Adminer interface provided by PikaPods:

And I cleaned up the entire database from the various attempts I had made, to prepare it for the final import.

DELETE FROM website_event WHERE website_id = 'f6032ede-9ea2-405a-9a0e-c7fec2b43bb1';

DELETE FROM session WHERE website_id = 'f6032ede-9ea2-405a-9a0e-c7fec2b43bb1';

A final check that must return 0 cells and tables:

SELECT COUNT(*) FROM website_event WHERE website_id = 'f6032ede-9ea2-405a-9a0e-c7fec2b43bb1';

SELECT COUNT(*) FROM session WHERE website_id = 'f6032ede-9ea2-405a-9a0e-c7fec2b43bb1';

And finally, I imported the files through the PikaPods Adminer interface, making sure to prevent the Mac from going to sleep during the process (just run caffeinate command in the terminal).

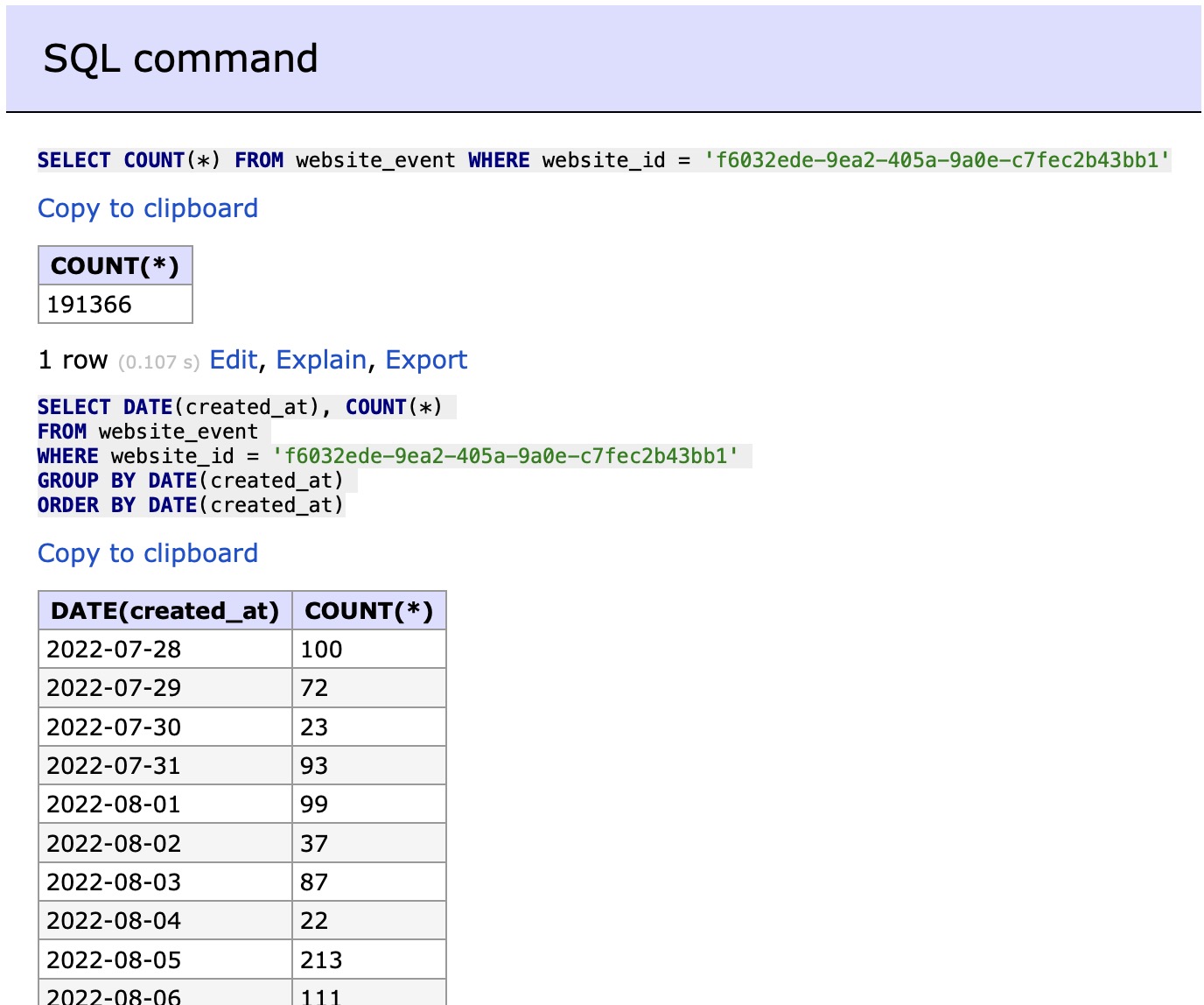

A final check in the database to ensure that everything was correct and not spread out over just a few days:

SELECT COUNT(*) FROM website_event WHERE website_id = 'f6032ede-9ea2-405a-9a0e-c7fec2b43bb1';

SELECT DATE(created_at), COUNT(*)

FROM website_event

WHERE website_id = 'f6032ede-9ea2-405a-9a0e-c7fec2b43bb1'

GROUP BY DATE(created_at)

ORDER BY DATE(created_at);

It must return a high number of events and columns, not just 2 or 5 in short:

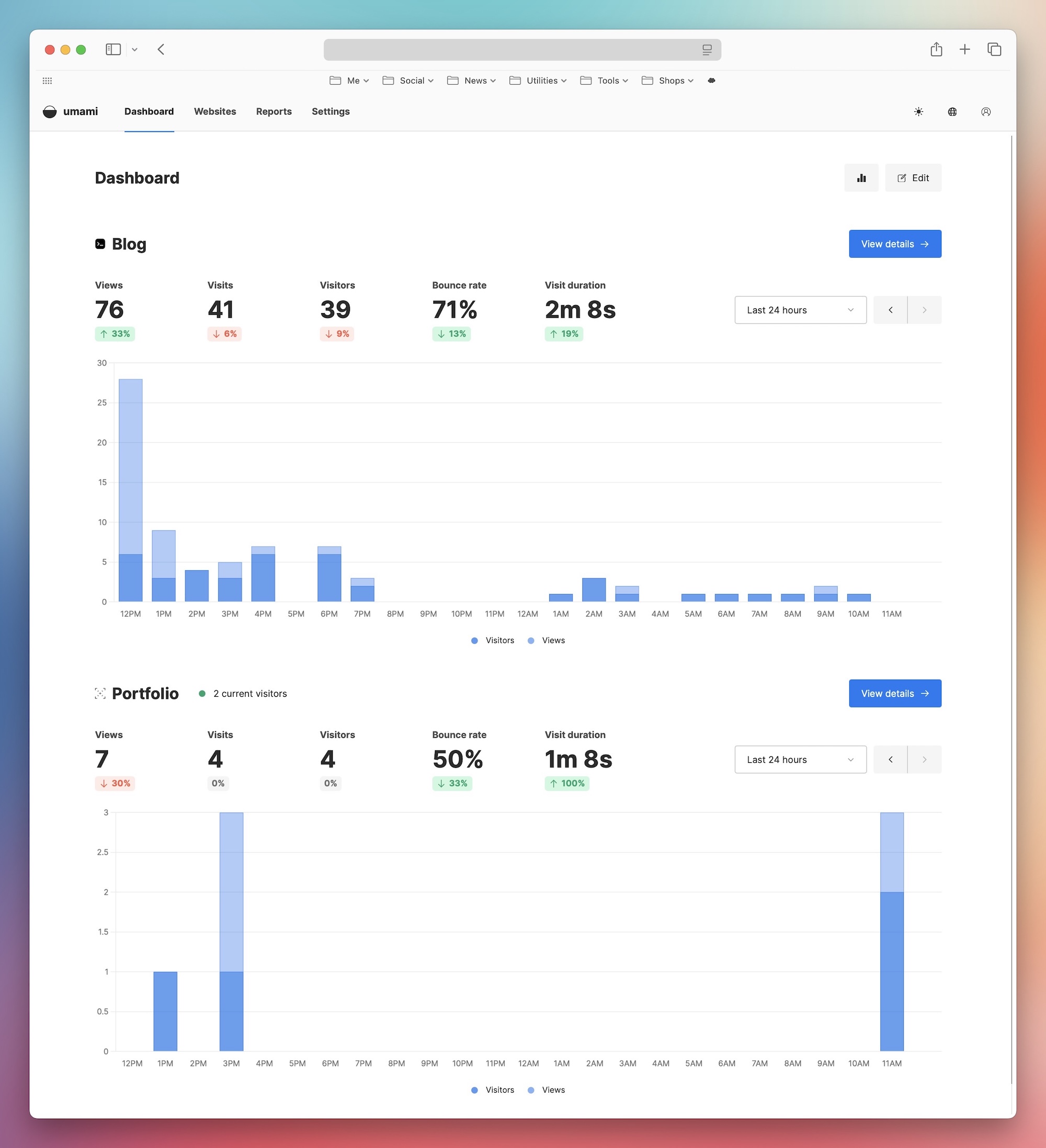

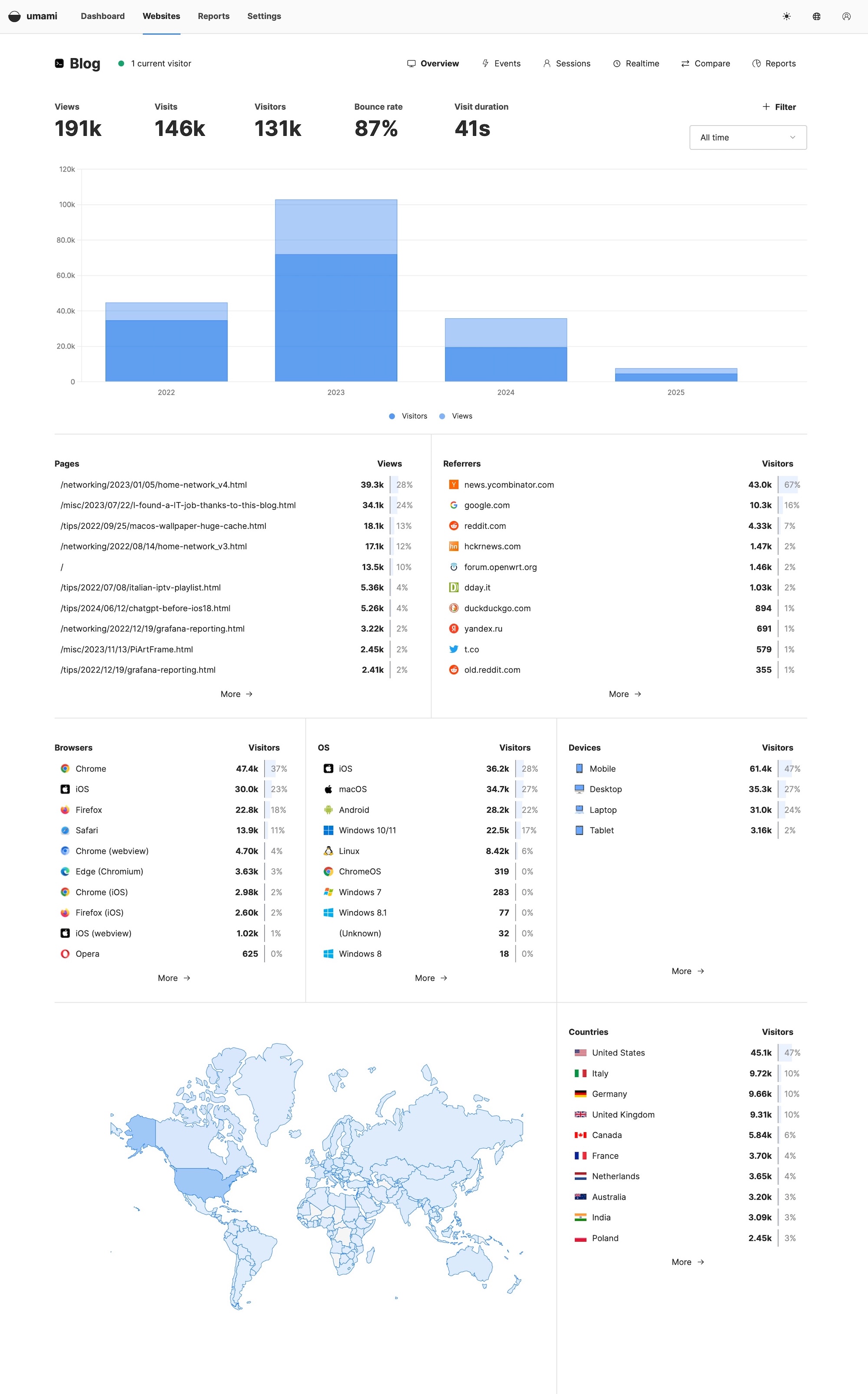

I waited around 2–3 minutes to make sure the interface had updated properly, and then all the data reappeared exactly as it was in the old PostgreSQL DB on DigitalOcean:

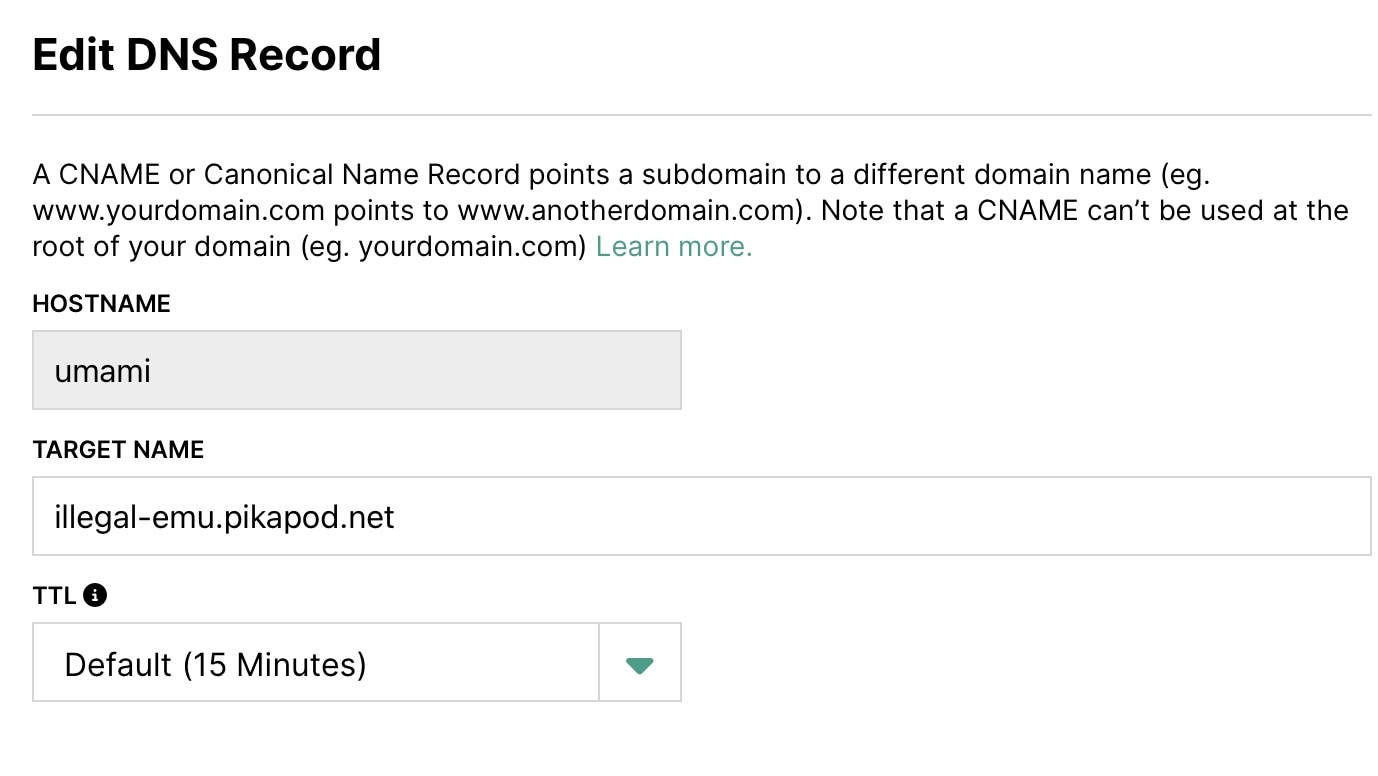

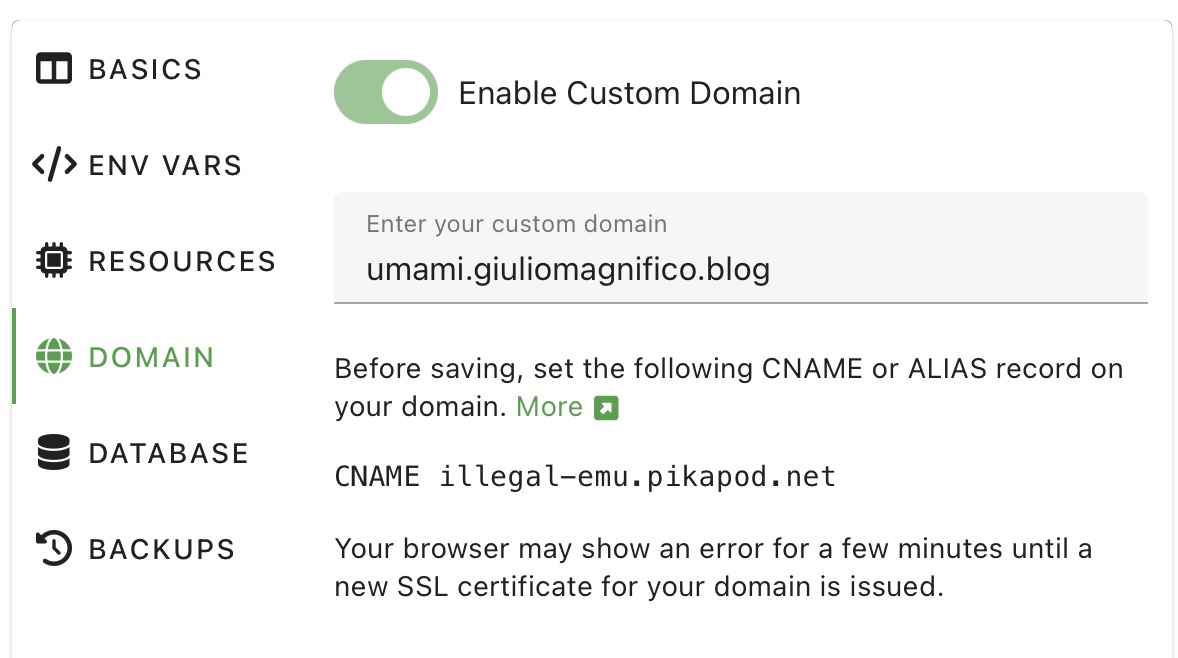

Subdomain change

Lastly, on DigitalOcean I was using a subdomain umami.giuliomagnifico.blog. I just had to delete the records on DigitalOcean, point the new CNAME from Hover to the PikaPods URL for umami.giuliomagnifico.blog, wait for the DNS update, and everything went back exactly as before.

This is my pod on PikaPods — I only added 512 MB of RAM to the default config, but it should run fine even with just 512 MB.